Introduction

When organizations adopt GitOps they face many challenges, such as how to manage secrets in git and what directory structure to use for repos. One of the more vexing challenges is how to integrate CI processes with GitOps, aka CD.

A CI pipeline is largely a synchronous process that runs from start to end: we compile our source code, build an image, push it out to deployments, run integration tests, and so on in a continuous flow. Conversely, GitOps follows an event-driven flow in which a change in git or in cluster state drives the reconciliation process to synchronize the state. As anyone who has worked with messaging systems knows, getting synchronous and asynchronous systems to work well together can be akin to herding cats, which is why this is a vexing challenge.

In this blog article I will cover the three different approaches that are most often used along with the pros and cons of each approach and the use cases where it makes sense. I will cover some of the implementation details through the lens of OpenShift Pipelines (Tekton) and OpenShift GitOps (Argo CD).

A quick note on terminology: the shorthand acronyms CI (Continuous Integration) and CD (Continuous Deployment) will be used throughout the remainder of this article. CI refers to pipelines for compiling applications, building images, running integration tests, and more, covered by tools like Jenkins, Tekton, GitHub Actions, etc. CD here means GitOps tools like Argo CD, Flux or RHACM that are used to deploy applications.

It’s important to keep this distinction in mind since tools like Jenkins are traditionally referred to as CI/CD tools. However, when referencing CD here the intent is specifically a GitOps tool, which Jenkins is not.

Approaches

As mentioned there are three broad approaches typically used to integrate CI with CD.

1. CI Owned and Managed. In this model the CI tool completely owns and manages new deployments on its own, though GitOps can still manage the provisioning of the manifests. When used with GitOps this approach often uses floating tags (e.g. dev, test) or has the GitOps tool not track image changes so the CI tool can push new deployments without impacting GitOps.

The benefit of this approach is that it continues to follow the traditional CI/CD approach that organizations have gotten comfortable with using tools like Jenkins thus reducing the learning curve. As a result many organizations start with this approach at the earliest stages of their GitOps journey.

The drawback of this model is that it runs counter to the GitOps philosophy that git is the source of truth. With floating tags there is no precision about which image is actually in use at any given moment. If you opt to ignore image references instead, what’s in git is definitely not what’s in the cluster since image references are never updated.

As a result I often see this used in organizations which are new to GitOps and have existing pipelines they want to reuse; it’s essentially step 1 of the GitOps journey for many people.

2. CI Owned, CD Participates. Here the CI tool owns and fully manages the deployment of new images but engages the GitOps tool to do the actual deployment. Once the GitOps process has completed, the CI tool validates the update. From an implementation point of view the CI pipeline will update the manifests, say a Deployment, in git with a new image tag along with a corresponding commit. At this point the pipeline will trigger and monitor the GitOps deployment via APIs in the GitOps tool, keeping the entire process managed by the CI pipeline from start to end in a synchronous fashion.

The good part here is that it fully embraces GitOps in the sense that what is in git is what is deployed in the cluster. Another benefit is that it maintains a start-to-end pipeline which keeps the process easy to follow and troubleshoot.

The negative is the additional complexity of integrating the pipeline with the GitOps tool: integrating a synchronous process (CI) with what is inherently an event-driven asynchronous activity (CD) can be challenging. For example, the GitOps tool may already be deploying a change when the CI tool attempts to initiate a new sync, causing the call to fail.

This option makes sense in environments (dev/test) where there are no asynchronous gating requirements (e.g. human approval) and the organization has a desire to fully embrace GitOps.

3. CI Triggered, CD Owned. In this case we have a CI tool that manages the build of the application and image but for deployment it triggers an asynchronous event which will cause the GitOps tool to perform the deployment. This can be done in a variety of ways including a Pull Request (PR) or a fire-and-forget commit in git at which point the CD owns the deployment. Once the CD process has completed the deployment, it can trigger additional pipeline(s) to perform post-deployment tasks such as integration testing, notifications, etc.

When looking at this approach the benefit is we avoid the messiness of integrating the two tools directly as each plays in their own swimlane. The drawback is the pipeline is no longer a simple start-to-end process but turns into a loosely coupled asynchronous event-driven affair with multiple pipelines chained together which can make troubleshooting more difficult. Additionally, the sync process can happen for reasons unrelated to an updated deployment so chained pipelines need to be able to handle this.
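
One way a chained pipeline might cope with syncs that did not change the deployment is to compare the image that is currently deployed with the image it last tested, and exit early when they match. The following is a minimal sketch of that guard only. The function name and the image references are hypothetical, and where the "last tested" value is stored (a ConfigMap, an annotation, and so on) is left open as an assumption.

```shell
#!/usr/bin/env bash
# Decide whether a post-sync pipeline needs to run its tests.
# Both arguments are full image references (hypothetical values below).
should_run_tests() {
  local deployed_image="$1" last_tested_image="$2"
  if [ -z "$deployed_image" ]; then
    return 1   # nothing deployed yet, so nothing to test
  fi
  # Succeeds only when the deployed image differs from the last tested one
  [ "$deployed_image" != "$last_tested_image" ]
}

if should_run_tests "quay.io/example/server:abc1234-42" "quay.io/example/server:abc1234-41"; then
  echo "New image detected, running integration tests"
else
  echo "Sync did not change the image, skipping tests"
fi
```

The comparison is deliberately on the full image reference so that a change of registry or repository also counts as a new deployment.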

Implementation

In this section we will review how to implement each approach. Since I am using Red Hat OpenShift, my focus here will be on OpenShift Pipelines (Tekton) and OpenShift GitOps (Argo CD); however, the techniques should be broadly applicable to other toolsets. Additionally, I am deliberately not looking at tools which fall outside the purview of Red Hat supported products. So while tools like Argo Events, Argo Rollouts and Argo Image Updater are very interesting, they are not currently supported by Red Hat and thus not covered here.

Throughout the implementation discussion we will be referencing a Product Catalog application. This is a three tier application, as per the diagram below, that consists of a Node.js single page application (SPA) running in an NGINX container connecting to a Java Quarkus API application, which in turn performs CRUD actions against a MariaDB database.

As a result of this architecture there are separate pipelines for the client and server components but they share many elements. The implementation approaches discussed below are encapsulated in sub-pipelines which are invoked as needed by the client and server for reuse. I’ve opted for sub-pipelines in order to better show the logic in demos, but it could just as easily be encapsulated into a custom Tekton task.

From a GitOps perspective we have two GitOps instances deployed, a cluster scoped instance and a namespace scoped instance. The cluster scoped instance is used to configure the cluster as well as resources required by tenants which need cluster-admin rights, things like namespaces, quotas and operators. In this use case the cluster scoped instance deploys the environment namespaces (product-catalog-dev, product-catalog-test and product-catalog-prod) as well as the Product Catalog team’s namespaced GitOps instance.

This is being mentioned because you will see references to two different repos and this could be confusing, specifically the following two repos:

1. cluster-config. Managed by the Platform (Ops) team, this is the repo with the manifests deployed by the cluster scoped instance, including the product-catalog team’s namespaces and GitOps instance in the tenants folder.

2. product-catalog. Managed by the application (product-catalog) team, this contains the manifests deployed by the namespace scoped instance. It deploys the actual application and is the repo containing the application image references.

If you are interested in seeing more information about how I organize my GitOps repos you can view my standards document here.

Common Elements

In all three approaches we will need to integrate with git for authentication purposes. In OpenShift Pipelines you can easily integrate a git token with pipelines via a secret as per the docs. The first step is creating the secret and annotating it; below is an example for GitHub:

apiVersion: v1
data:
  email: XXXXXXXXXXXX
  password: XXXXXXXXX
  username: XXXXXXXXX
kind: Secret
metadata:
  annotations:
    tekton.dev/git-0: https://github.com
  name: github
  namespace: product-catalog-cicd
type: kubernetes.io/basic-auth

The second thing we need to do is link the secret to the pipeline service account. Since this account is created and managed by the Pipelines operator, I prefer doing the linking after the fact rather than overwriting it with yaml deployed from git. This is done using a PostSync hook in Argo CD (aka a Job). Below is the basic job; the serviceaccount and role aspects needed to go with it are available here.

apiVersion: batch/v1
kind: Job
metadata:
  name: setup-local-credentials
  annotations:
    argocd.argoproj.io/hook: PostSync
    argocd.argoproj.io/hook-delete-policy: HookSucceeded
spec:
  template:
    spec:
      containers:
        - image: registry.redhat.io/openshift4/ose-cli:v4.9
          command:
            - /bin/bash
            - -c
            - |
              echo "Linking github secret with pipeline service account"
              oc secrets link pipeline github
          imagePullPolicy: Always
          name: setup-local-credentials
      serviceAccount: setup-local-credentials
      serviceAccountName: setup-local-credentials
      dnsPolicy: ClusterFirst
      restartPolicy: OnFailure
      terminationGracePeriodSeconds: 30

CI Owned and Managed

This is the traditional approach that has been around since dinosaurs roamed the earth (T-Rex was a big fan of Hudson), so we will not go into great detail on this but cover some of the OpenShift Pipelines specifics from an implementation point of view.

In OpenShift, or for that matter Kubernetes, the different environments (dev/test/prod) will commonly be in different namespaces. OpenShift Pipelines creates a pipeline service account that the various pipelines use by default when running. To allow the pipeline to interact with the different environments in their namespaces, we need to give the pipeline SA the appropriate role. Here is an example of a RoleBinding in the development environment (product-catalog-dev namespace) giving edit rights to the pipeline service account in the cicd namespace where the pipeline is running.

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: cicd-pipeline-edit
  namespace: product-catalog-dev
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: edit
subjects:
  - kind: ServiceAccount
    name: pipeline
    namespace: product-catalog-cicd

This would need to be done for each environment that the pipeline needs to interact with. Also note that I am taking the easy way and using the OOTB edit ClusterRole in OpenShift; more security conscious organizations may wish to define a Role with more granular permissions.
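
Since the same RoleBinding is repeated per environment, it can be generated rather than copied. The sketch below prints one manifest for each of the three environment namespaces mentioned earlier. It is an illustration only, the function names are hypothetical, and it assumes the pipeline should have edit rights in all three environments.

```shell
#!/usr/bin/env bash
# Print a RoleBinding granting the pipeline service account the edit
# ClusterRole in one environment namespace.
render_rolebinding() {
  local env="$1"
  cat <<EOF
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: cicd-pipeline-edit
  namespace: product-catalog-${env}
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: edit
subjects:
  - kind: ServiceAccount
    name: pipeline
    namespace: product-catalog-cicd
EOF
}

# Emit one RoleBinding per environment.
render_all_rolebindings() {
  local env
  for env in dev test prod; do
    render_rolebinding "$env"
  done
}

render_all_rolebindings
```

The output could be committed to the GitOps repo, or piped to `oc apply -f -` when experimenting.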

If you are using floating tags in an enterprise registry (e.g. dev or test) you will need to set the imagePullPolicy to Always to ensure the new image gets deployed on a rollout. At this point a new deployment can be triggered in a task simply by running oc rollout restart.

CI Owned, CD Participates
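
As a rough sketch of what such a task step might look like, the script below restarts a Deployment and then waits for the rollout to finish. The deployment name `server` and the 120 second timeout are assumptions for illustration. The `DRY_RUN` switch is also an addition of this sketch so that the commands can be inspected without a cluster.

```shell
#!/usr/bin/env bash
# With DRY_RUN=1 (the default here) commands are printed instead of executed.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Restart a Deployment so it re-pulls the floating tag, then wait for it.
redeploy() {
  local deployment="$1" namespace="$2"
  run oc rollout restart "deployment/${deployment}" -n "$namespace"
  run oc rollout status "deployment/${deployment}" -n "$namespace" --timeout=120s
}

redeploy server product-catalog-dev
```

Waiting on the rollout status keeps the pipeline synchronous: the step fails if the new pods do not become ready in time.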

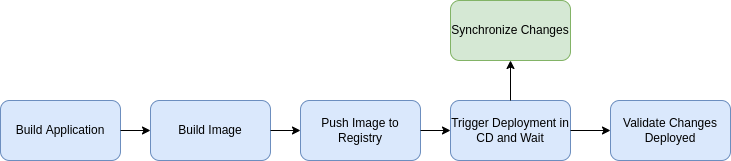

As discussed previously, in this approach the CI pipeline manages the flow from start-to-finish but instead of doing the deployment itself it defers it to CD, aka GitOps. To accomplish this, the pipeline will clone the manifest repo with all of the yaml managed by GitOps, update the image tag for the new image and then commit it back to git. It will then wait for the GitOps tool to perform the deployment and validate the results. This flow is encapsulated in the following pipeline:

This sub-pipeline is invoked by the server and client pipelines via the tkn CLI since we want to trigger this pipeline and wait for it to complete maintaining a synchronous process. Here is an example that calls this pipeline to deploy a new server image in the dev environment:

tkn pipeline start --showlog --use-param-defaults --param application=server --param environment=dev --prefix-name=server-gitops-deploy-dev --param tag=$(tasks.generate-id.results.short-commit)-$(tasks.generate-id.results.build-uid) --workspace name=manifest-source,claimName=manifest-source gitops-deploy
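
The tag passed above combines a short commit id with a build identifier, which gives every build a unique, immutable tag that can be recorded in git. The generate-id task itself is not shown in this article, so the following is only a guess at the idea: the function name, the 7 character truncation and the sample values are all assumptions.

```shell
#!/usr/bin/env bash
# Build an image tag of the form <short-commit>-<build-uid>.
make_image_tag() {
  local commit_sha="$1" build_uid="$2"
  # Keep the first 7 characters of the commit SHA
  printf '%s-%s\n' "${commit_sha:0:7}" "$build_uid"
}

make_image_tag "3f2a9c1d8e7b6a5f4c3d2e1f0a9b8c7d6e5f4a3b" "x7k2p"
```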

Let’s look at this gitops deployment sub-pipeline in a little more detail for each individual task.

1. acquire-lease. Since this pipeline is called by other pipelines there is a lease which acts as a mutex to ensure only one instance of this pipeline can run at a time. The details are not relevant to this article however for those interested the implementation was based on an article found here.

2. clone. This task clones the manifest yaml files into a workspace to be used in subsequent steps.

3. update-image. There are a variety of ways to update the image reference depending on how you are managing yaml in GitOps. If GitOps is deploying raw yaml, you may need something like yq to patch a deployment. If you are using a helm chart, yq again could help you update the values.yaml. In my case I am using kustomize, which has the capability to override the image tag in an overlay with the following command:

kustomize edit set image <image-name>=<new-image>:<new-image-tag>

In this pipeline we have a kustomize task for updating the image reference. It takes parameters for the image name, new image name and tag as well as the path to the kustomize overlay. In my case the overlay is associated with the environment and cluster; you can see an example here for the dev environment in the home cluster.
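
To make the parameters concrete, here is a small sketch of how the overlay path and the `kustomize edit set image` argument could be assembled from them. The helper names are hypothetical and the tag value is a placeholder. The path layout mirrors the clusters/<cluster>/overlays/<environment> structure used by the commit step in this article. The commands are printed rather than executed.

```shell
#!/usr/bin/env bash
# Compose the <image-name>=<new-image>:<new-image-tag> argument.
image_override() {
  local image_name="$1" new_image="$2" new_tag="$3"
  printf '%s=%s:%s\n' "$image_name" "$new_image" "$new_tag"
}

# Path of the overlay for a cluster/environment pair.
overlay_path() {
  local cluster="$1" environment="$2"
  printf 'clusters/%s/overlays/%s\n' "$cluster" "$environment"
}

# Print the commands a task might run (not executed here).
echo "cd $(overlay_path home dev)"
echo "kustomize edit set image $(image_override quay.io/gnunn/server quay.io/gnunn/server 3f2a9c1-x7k2p)"
```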

4. commit-change. Once we have updated the image we need to commit the change back to git using a git task and running the appropriate git commands. In this pipeline the following commands are used:

if git diff --exit-code;
then
  echo "No changes staged, skipping add/commit"
else
  echo "Changes made, committing"
  git config --global user.name "pipeline"
  git config --global user.email "pipelines@nomail.com"
  git add clusters/$(params.cluster)/overlays/$(params.environment)/kustomization.yaml
  git commit -m 'Update image in git to quay.io/gnunn/$(params.application):$(params.tag)'
  echo "Running 'git push origin HEAD:$(params.git_revision)'"
  git push origin HEAD:$(params.git_revision)
fi

One thing to keep in mind is that it is possible for the pipeline to be executed when no code has been changed, for example testing the pipeline with the same image reference. The if statement here exists as a guard for this case.

5. gitops-deploy. This is where you trigger OpenShift GitOps to perform the deployment. To accomplish this the pipeline needs to use the argocd CLI to interact with OpenShift GitOps, which in turn requires a token to be available before the pipeline runs.

Since we are deploying everything with a cluster level GitOps, including the namespaced GitOps that is handling the deployment here, we can have the cluster level GitOps create a local account and then generate a corresponding token for that account in the namespaced GitOps instance. A job running as a PostSync hook does the work here: it checks if the local account already exists and, if not, creates it along with a token which is stored as a secret in the CICD namespace for the pipeline to consume.

apiVersion: batch/v1
kind: Job
metadata:
  name: create-pipeline-local-user
  annotations:
    argocd.argoproj.io/hook: PostSync
    argocd.argoproj.io/hook-delete-policy: HookSucceeded
spec:
  template:
    spec:
      containers:
        - image: registry.redhat.io/openshift-gitops-1/argocd-rhel8:v1.4.2
          command:
            - /bin/bash
            - -c
            - |
              export HOME=/home/argocd
              echo "Checking if pipeline account already there..."
              HAS_ACCOUNT=$(kubectl get cm argocd-cm -o jsonpath={.data."accounts\.pipeline"})
              if [ -z "$HAS_ACCOUNT" ];
              then
                  echo "Pipeline account doesn't exist, adding"
                  echo "Getting argocd admin credential..."
                  if kubectl get secret argocd-cluster;
                  then
                    # Create pipeline user
                    kubectl patch cm argocd-cm --patch '{"data": {"accounts.pipeline": "apiKey"}}'
                    # Update password
                    PASSWORD=$(oc get secret argocd-cluster -o jsonpath="{.data.admin\.password}" | base64 -d)
                    argocd login --plaintext --username admin --password ${PASSWORD} argocd-server
                    TOKEN=$(argocd account generate-token --account pipeline)
                    kubectl create secret generic argocd-env-secret --from-literal=ARGOCD_AUTH_TOKEN=${TOKEN} -n ${CICD_NAMESPACE}
                  else
                    echo "Secret argocd-cluster not available, could not interact with API"
                  fi
              else
                  echo "Pipeline account already added, skipping"
              fi
          env:
            # The CICD namespace where the token needs to be deployed to
            - name: CICD_NAMESPACE
              value: ""
          imagePullPolicy: Always
          name: create-pipeline-local-user
      serviceAccount: argocd-argocd-application-controller
      serviceAccountName: argocd-argocd-application-controller
      dnsPolicy: ClusterFirst
      restartPolicy: OnFailure
      terminationGracePeriodSeconds: 30

Since the argocd-argocd-application-controller service account already has access to the various namespaces, we simply reuse it for the job, which needs to create a secret in the product-catalog-cicd namespace. Again, more security conscious organizations may wish to use more granular permissions.

Finally we also need to give this local account, pipeline, appropriate RBAC permissions in the namespaced GitOps instance. The following roles are defined in the ArgoCD CR for the namespaced instance:

spec:
  ...
  rbac:
    defaultPolicy: 'role:readonly'
    policy: |
      p, role:pipeline, applications, get, apps-product-catalog/*, allow
      p, role:pipeline, applications, sync, apps-product-catalog/*, allow
      g, product-catalog-admins, role:admin
      g, system:cluster-admins, role:admin
      g, pipeline, role:pipeline
    scopes: '[accounts,groups]'

Once we have the integration in place we can use a task in the pipeline to trigger a sync in GitOps via the argocd CLI. Unfortunately this part can be a bit tricky depending on how you have GitOps configured, given that it is an asynchronous process and timing issues can occur. For example, if you are using webhooks with GitOps it’s quite possible that the deploy is already in progress and trying to trigger it again will fail.
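
A generic way to soften this kind of timing failure is to retry the call with a growing delay. This is not the approach used in this pipeline; it is a different and simpler technique, sketched here for comparison. The `retry` helper and the application name in the usage comment are hypothetical.

```shell
#!/usr/bin/env bash
# Run a command up to max_attempts times, doubling the delay after each failure.
retry() {
  local max_attempts="$1" delay="$2"
  shift 2
  local attempt=1
  until "$@"; do
    if [ "$attempt" -ge "$max_attempts" ]; then
      echo "Command failed after ${attempt} attempts: $*" >&2
      return 1
    fi
    echo "Attempt ${attempt} failed, retrying in ${delay}s" >&2
    sleep "$delay"
    attempt=$((attempt + 1))
    delay=$((delay * 2))
  done
}

# Harmless demonstration: succeeds on the first attempt.
retry 3 0 true

# Example use inside a task (application name is hypothetical):
#   retry 5 10 argocd app sync product-catalog-dev
```

A blind retry does not tell you whether the desired image was actually deployed, so it would still need to be paired with a validation step.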

In this pipeline we took the example Argo CD Sync and Wait task in Tekton Hub and modified it to make it somewhat more resilient. The key change was having the task execute argocd app wait first and then validate if the image was already updated before performing an explicit sync. The full task is available here, but here is the portion doing the work:

if [ -z "$ARGOCD_AUTH_TOKEN" ]; then
  yes | argocd login "$ARGOCD_SERVER" --username="$ARGOCD_USERNAME" --password="$ARGOCD_PASSWORD";
fi

# Application may already be syncing due to webhook
echo "Waiting for automatic sync if it was already triggered"
argocd app wait "$(params.application-name)" --health "$(params.flags)"

echo "Checking current tag in namespace $(params.namespace)"
CURRENT_TAG=$(oc get deploy $(params.deployment) -n $(params.namespace) -o jsonpath="{.spec.template.spec.containers[$(params.container)].image}" | cut -d ":" -f2)
if [ "$CURRENT_TAG" = "$(params.image-tag)" ]; then
  echo "Image has been synced, exiting"
  exit 0
fi

echo "Running argocd sync..."
argocd app sync "$(params.application-name)" --revision "$(params.revision)" "$(params.flags)"
argocd app wait "$(params.application-name)" --health "$(params.flags)"

CURRENT_TAG=$(oc get deploy $(params.deployment) -n $(params.namespace) -o jsonpath="{.spec.template.spec.containers[$(params.container)].image}" | cut -d ":" -f2)
if [ "$CURRENT_TAG" = "$(params.image-tag)" ]; then
  echo "Image has been synced"
else
  echo "Image failed to sync, requested tag is $(params.image-tag) but current tag is $CURRENT_TAG"
  exit 1;
fi

Also note that this task validates that the required image was deployed and fails the pipeline if it was not deployed for any reason. I suspect this task will likely require some further tuning based on comments in this Argo CD issue.
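
One detail worth knowing about the tag check: splitting the image reference on the first ":" works for references like quay.io/gnunn/server:tag, but it would return the wrong field if the registry host includes a port. The helper below is a sketch of a more defensive extraction using only shell parameter expansion. The function name and the example registry are hypothetical.

```shell
#!/usr/bin/env bash
# Extract the tag from an image reference.
# Prints an empty line when the reference has no tag.
image_tag() {
  local ref="$1"
  ref="${ref%%@*}"          # drop a digest suffix such as @sha256:...
  local last="${ref##*/}"   # last path segment, so a registry port is ignored
  case "$last" in
    *:*) printf '%s\n' "${last##*:}" ;;
    *)   printf '\n' ;;
  esac
}

image_tag "quay.io/gnunn/server:3f2a9c1-x7k2p"
image_tag "registry.example.com:5000/team/server:1.2.3"
```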

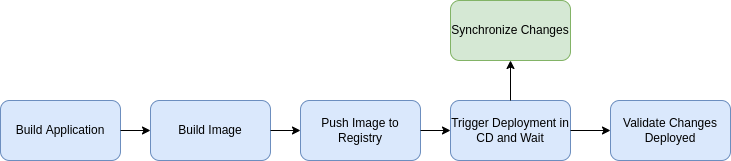

CI Triggered, CD Owned

As a refresher, in this model the pipeline triggers the deployment operation in GitOps, at which point the pipeline completes and the actual deployment is owned by GitOps. This can be done via a Pull Request (PR), a fire-and-forget commit, etc. Here we will look at doing it with a PR in order to support gating requirements that need human approval, which is an asynchronous process.

This pipeline does require a secret for GitHub in order to create the PR; however, we simply reuse the same secret that was provided earlier.

The pipeline that provides this capability in the product-catalog demo is as follows:

This pipeline is invoked by the server and client pipelines via a webhook since we are treating this as an event.

In this pipeline the following steps are performed:

1. clone. Clone the git repo of manifest yaml that GitOps is managing.

2. branch. Create a new branch in the git repo to generate the PR from. In the product catalog I use push-<build-id> as the branch identifier.

3. patch. Update the image reference using the same kustomize technique that we did previously.

4. commit. Commit the change and push it in the new branch to the remote repo.

5. prod-pr-deploy. This task creates a pull request in GitHub, the GitHub CLI makes this easy to do:

gh pr create -t "$(params.title)" -b "$(params.body)"

One thing to note in the pipeline is that it passes in links to all of the gating requirements, such as image vulnerabilities in Quay and RHACS as well as static code analysis from SonarQube.

Once the PR is created the pipeline ends. When the application is synced by Argo CD, it runs a post-sync hook Job to start a new pipeline that runs the integration tests and sends a notification if the tests fail.
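
As an illustration of how those links might be assembled into the PR body, the sketch below builds the text from "Label=URL" pairs. The function name, the wording of the body and all of the URLs are placeholders, not the values used in the product-catalog demo.

```shell
#!/usr/bin/env bash
# Build a pull request body listing links to the gating evidence.
# First argument is the image; the rest are "Label=URL" pairs.
pr_body() {
  local image="$1"
  shift
  printf 'Promote image %s to production.\n\nGating checks:\n' "$image"
  local pair
  for pair in "$@"; do
    # Split on the first "=" only, so URLs containing "=" stay intact
    printf -- '- %s: %s\n' "${pair%%=*}" "${pair#*=}"
  done
}

body="$(pr_body "quay.io/gnunn/server:3f2a9c1-x7k2p" \
  "Quay vulnerabilities=https://quay.example.com/scan" \
  "RHACS=https://rhacs.example.com/report" \
  "SonarQube=https://sonar.example.com/dashboard")"
printf '%s\n' "$body"

# A task could then pass the text to the GitHub CLI:
#   gh pr create -t "Promote server" -b "$body"
```

Putting the evidence in the PR itself means the approver can review the gates without leaving the pull request.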

apiVersion: batch/v1
kind: Job
metadata:
  name: post-sync-pipeline
  generateName: post-sync-pipeline-
  annotations:
    argocd.argoproj.io/hook: PostSync
    argocd.argoproj.io/hook-delete-policy: HookSucceeded
spec:
  template:
    spec:
      containers:
        - image: registry.access.redhat.com/ubi8
          command:
            - "curl"
            - "-X"
            - "POST"
            - "-H"
            - "Content-Type: application/json"
            - "--data"
            - "{}"
            - "http://el-server-post-prod.product-catalog-cicd:8080"
          imagePullPolicy: Always
          name: post-sync-pipeline
      dnsPolicy: ClusterFirst
      restartPolicy: OnFailure
      terminationGracePeriodSeconds: 30

Conclusion

We reviewed the three different approaches to integrating OpenShift Pipelines and OpenShift GitOps and then examined a concrete implementation of each approach.