In this article we will look at how to build Scala applications in OpenShift. While Scala is a Java Virtual Machine (JVM) language, applications written in Scala typically use a build tool called SBT (https://www.scala-sbt.org), which OpenShift does not currently support out of the box. However, the great thing about OpenShift is that it is extensible, and adding support for Scala applications is straightforward, as this article will show.

As a quick level set, when building and deploying applications for OpenShift there are typically three options available as follows:

- Source-to-Image (S2I). This is a capability available in OpenShift via builder images that enables developers to simply point a builder at a git repository and have the platform compile the application and create an image automatically.

- Jenkins Pipeline. In this scenario we leverage Jenkins to create a pipeline for our application and use an appropriate build agent (i.e. slave) for our technology. Out of the box, OpenShift includes agents for Maven and Node.js but not for Scala/SBT.

- Build the container image outside of OpenShift and push the resulting image into the internal OpenShift registry. This is essentially treating the platform as a Container-As-A-Service (CaaS) rather than leveraging the Platform-As-A-Service (PaaS) capabilities available in OpenShift.

All of the above approaches are perfectly valid, and the choice enterprises make in this regard is typically driven by a variety of factors including the technology used, toolset and organizational requirements.

For the purposes of this article I am going to focus on building Scala applications using the second option, Jenkins Pipelines, which is typically my preferred approach for production applications. Note that this option is not exclusive of the first: you can certainly use S2I in a Jenkins pipeline to build your application from source. However, I prefer using a build agent because a variety of activities (code scanning, pushing to a repository, pre-processing, etc.) need to be performed as part of the build process. These are often application specific, and a build agent simply gives us more flexibility in this regard.

Additionally, I typically recommend that my customers use Red Hat’s JDK image whenever possible in order to benefit from the support that is provided as part of an OpenShift (or RHEL) subscription. With a build agent this is a natural activity as part of the pipeline, whereas with S2I you would need to use build chaining, which isn’t quite as straightforward. Having said that, if you are interested in the S2I approach, an example of creating an S2I enabled image is available here and it works well.

Creating the build agent

In OpenShift the provided Jenkins image includes the Jenkins kubernetes-plugin. This plugin enables Jenkins to spin up build agents in the Kubernetes environment as needed to support builds. Separate and distinct build agents are typically used to support different technologies and build tools. As mentioned previously, OpenShift includes two build agents, Maven and Node.js, but does not provide a build agent for Scala or SBT.

Fortunately, creating our own build agent is quite easy to do since OpenShift provides a base image to inherit from. If you are using the open source version of OpenShift, OKD, you can use the base image openshift/jenkins-slave-base-centos7, whereas if you are using the enterprise version of OpenShift I would recommend the rhel7 version, openshift/jenkins-slave-base-rhel7.

In this example we will use the centos7 version of the image. To create the agent image we need a Dockerfile that defines it; our example uses the Dockerfile below, which is also available in the github repo sbt-slave.

    FROM openshift/jenkins-slave-base-centos7
    MAINTAINER Gerald Nunn <gnunn@redhat.com>

    ENV SBT_VERSION 1.2.6
    ENV SCALA_VERSION 2.12.7
    ENV IVY_DIR=/var/cache/.ivy2
    ENV SBT_DIR=/var/cache/.sbt

    USER root

    RUN INSTALL_PKGS="sbt-$SBT_VERSION" \
     && curl -s https://bintray.com/sbt/rpm/rpm > bintray-sbt-rpm.repo \
     && mv bintray-sbt-rpm.repo /etc/yum.repos.d/ \
     && yum install -y --enablerepo=centosplus $INSTALL_PKGS \
     && rpm -V $INSTALL_PKGS \
     && yum install -y https://downloads.lightbend.com/scala/$SCALA_VERSION/scala-$SCALA_VERSION.rpm \
     && yum clean all -y

    USER 1001

Notice that this image defines environment variables for the Scala and SBT versions. While the image is built with specific versions pre-loaded, SBT will automatically use the version specified in your build if it differs. To build the image, use the following command:

    docker build . -t jenkins-slave-sbt-centos7

After building the image, you can view the image as follows:

    $ docker images | grep jenkins-slave-sbt-centos7
    jenkins-slave-sbt-centos7   latest   d2a60298e18d   6 weeks ago   1.23GB
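On the earlier point about versions: sbt reads the version a project asks for from the `sbt.version` key in `project/build.properties`. The sketch below is one way to compare that value with the `SBT_VERSION` baked into the agent image. The function names are my own, chosen for illustration, and the messages only describe what sbt is expected to do rather than anything the script enforces.

```shell
#!/bin/sh
# Print the sbt version a project pins in project/build.properties.
# Prints nothing if the file or the key is missing.
project_sbt_version() {
  props="$1/project/build.properties"
  [ -f "$props" ] || return 0
  # Keep only the value after "sbt.version=", tolerating spaces around "=".
  sed -n 's/^[[:space:]]*sbt\.version[[:space:]]*=[[:space:]]*//p' "$props" | head -n 1
}

# Compare the pinned version with the one baked into the agent image.
# SBT_VERSION is the environment variable set in the Dockerfile.
check_sbt_version() {
  pinned="$(project_sbt_version "$1")"
  baked="${SBT_VERSION:-unknown}"
  if [ -z "$pinned" ]; then
    echo "no sbt.version pinned; the installed sbt picks its own default"
  elif [ "$pinned" = "$baked" ]; then
    echo "project uses sbt $pinned, already in the image"
  else
    echo "project uses sbt $pinned, image has $baked; sbt will download $pinned"
  fi
}
```

Running `check_sbt_version .` from the root of a checked-out project inside the agent gives a quick indication of whether the first build will spend time fetching a different sbt release.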

Next we need to make the image available to OpenShift. One option is to push it directly into your OpenShift registry. Another option, which is what we will do here, is to push it into an external registry, in this case Docker Hub. Note that you will need an account on Docker Hub in order to do this. The first step in pushing the image to Docker Hub (or another registry) is to tag the image with the repository. My repository in Docker Hub is gnunn, so that is what I am using here; your repository will be different, so please change gnunn to the name of your repository.
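Because the repository name recurs in the tag and push commands, it can help to hold it in a variable rather than edit each command by hand. The following is a minimal sketch of that idea. The `REPO` and `TAG` variables and the function names are my own and not part of the original setup. The script only prints the commands so they can be reviewed first.

```shell
#!/bin/sh
# REPO is your Docker Hub repository; "gnunn" is only the article's example.
REPO="${REPO:-gnunn}"
IMAGE="jenkins-slave-sbt-centos7"
TAG="${TAG:-latest}"

# Full image reference, e.g. gnunn/jenkins-slave-sbt-centos7:latest
image_ref() {
  echo "${REPO}/${IMAGE}:${TAG}"
}

# Print the tag and push commands instead of running them.
print_publish_commands() {
  echo "docker tag ${IMAGE}:latest $(image_ref)"
  echo "docker push $(image_ref)"
}

print_publish_commands
```

Saved under a name of your choosing (say `publish.sh`, a hypothetical file name), the printed commands can be executed by piping the output to `sh`, for example `REPO=myrepo sh publish.sh | sh`, once you have logged in with `docker login`. The same two commands are spelled out one at a time next, starting with the tag.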

    docker tag jenkins-slave-sbt-centos7:latest gnunn/jenkins-slave-sbt-centos7:latest

After that we can push the image; again, change gnunn to the name of your repository:

    docker push gnunn/jenkins-slave-sbt-centos7:latest

Building the Application in OpenShift

At this point we are almost ready to start building our Scala application. The first thing we need to do is create a project in OpenShift for the example. The command below does that, naming the project scala-example.

    oc new-project scala-example

Next we need to configure Jenkins to be aware of our new agent image. This can be done in a couple of different ways. The easiest but most manual way is to log in to Jenkins and add the new agent image to the Kubernetes plugin configuration via the Jenkins console.

However, I’m not a fan of this approach since it is manual and requires the Jenkins configuration to be updated every time a new instance of Jenkins is deployed. A better approach is to supply the configuration via a configmap, which the Kubernetes plugin in Jenkins will use to set its configuration. An example configmap is shown below:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      labels:
        app: cicd-pipeline
        role: jenkins-slave
      name: jenkins-slaves
    data:
      sbt-template: |-
        <org.csanchez.jenkins.plugins.kubernetes.PodTemplate>
          <inheritFrom></inheritFrom>
          <name>sbt</name>
          <privileged>false</privileged>
          <alwaysPullImage>false</alwaysPullImage>
          <instanceCap>2147483647</instanceCap>
          <idleMinutes>0</idleMinutes>
          <label>sbt</label>
          <serviceAccount>jenkins</serviceAccount>
          <nodeSelector></nodeSelector>
          <customWorkspaceVolumeEnabled>false</customWorkspaceVolumeEnabled>
          <workspaceVolume class="org.csanchez.jenkins.plugins.kubernetes.volumes.workspace.EmptyDirWorkspaceVolume">
            <memory>false</memory>
          </workspaceVolume>
          <volumes />
          <containers>
            <org.csanchez.jenkins.plugins.kubernetes.ContainerTemplate>
              <name>jnlp</name>
              <image>docker.io/gnunn/jenkins-slave-sbt-centos7</image>
              <privileged>false</privileged>
              <alwaysPullImage>false</alwaysPullImage>
              <workingDir>/tmp</workingDir>
              <command></command>
              <args>${computer.jnlpmac} ${computer.name}</args>
              <ttyEnabled>false</ttyEnabled>
              <resourceRequestCpu>200m</resourceRequestCpu>
              <resourceRequestMemory>512Mi</resourceRequestMemory>
              <resourceLimitCpu>2</resourceLimitCpu>
              <resourceLimitMemory>4Gi</resourceLimitMemory>
              <envVars/>
            </org.csanchez.jenkins.plugins.kubernetes.ContainerTemplate>
          </containers>
          <envVars/>
          <annotations/>
          <imagePullSecrets/>
        </org.csanchez.jenkins.plugins.kubernetes.PodTemplate>

Please note this line in the configuration:

<image>docker.io/gnunn/jenkins-slave-sbt-centos7</image>

The reference here will need to be updated for the registry and repository where you pushed the image. If you are using Docker Hub, again change gnunn to your repository. Once you have updated that line, save it into a file called jenkins-slaves.yaml. We can then add the configmap to our project in OpenShift using the following command:

    oc create -f jenkins-slaves.yaml

In order to test the build process we are going to need an application. I’ve forked a simple Scala microservice application to the github repo akka-http-microservice. I’ve made some minor modifications to it, notably updating the versions of Scala and SBT as well as adding the assembly plugin to create a fat jar.

First we will create a new build-config that the pipeline will use to feed the generated Scala artifact into the Red Hat JDK image. This build-config will still use source-to-image, but it will do so using a binary build since we will already have built the Scala JAR file using our build agent.

If you are using Red Hat’s OpenShift Container Platform, you can create the build using the existing Java S2I image that Red Hat provides:

    oc new-build redhat-openjdk18-openshift:1.2 --name=scala-example --binary=true

If you are using the open source OpenShift OKD, where the above image is not available, you can use the Fabric8 open source Java S2I image:

    oc create -f https://raw.githubusercontent.com/gnunn1/openshift-notes/master/fabric8-s2i-java/imagestream.yaml
    oc new-build fabric8-s2i-java:3.0-java8 --name=scala-example --binary=true

Next we create a new application around the build, expose it to the outside world via a route, and disable triggers since we want the pipeline to control the deployment:

    oc new-app scala-example --allow-missing-imagestream-tags
    oc expose dc scala-example --port=9000
    oc expose svc scala-example
    oc set triggers dc scala-example --containers='scala-example' --from-image='scala-example:latest' --manual=true

Note we specify --allow-missing-imagestream-tags because no images have been created at this point and thus the imagestream has no tags associated with it.

Now we need to create an OpenShift pipeline that references a corresponding pipeline in a Jenkinsfile. Below is the pipeline this example will be using.

    ---
    apiVersion: v1
    kind: BuildConfig
    metadata:
      annotations:
        pipeline.alpha.openshift.io/uses: '[{"name": "jenkins", "namespace": "", "kind": "DeploymentConfig"}]'
      labels:
        app: scala-example
        name: scala-example
      name: scala-example-pipeline
    spec:
      triggers:
        - type: GitHub
          github:
            secret: secret101
        - type: Generic
          generic:
            secret: secret101
      runPolicy: Serial
      source:
        type: Git
        git:
          uri: 'https://github.com/gnunn1/akka-http-microservice'
          ref: master
      strategy:
        jenkinsPipelineStrategy:
          jenkinsfilePath: Jenkinsfile
        type: JenkinsPipeline

Save the pipeline as pipeline.yaml and then create it in OpenShift using the command below. A Jenkins instance will be deployed automatically when you create the pipeline.

    oc create -f pipeline.yaml

Note that the pipeline above references a Jenkinsfile that is included in the source repository in git; you can view it here. This Jenkinsfile contains a simple pipeline that compiles the application, creates the image and then deploys it. For simplicity, in this example we will do it all in the same project where the pipeline lives, but typically you would deploy the application into separate projects representing individual environments (DEV, QA, PROD, etc.) and not into the same project as Jenkins and the pipeline.
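To make the three stages concrete, here is a rough hand-run equivalent of what such a pipeline does, written as a shell sketch. It is not a copy of the Jenkinsfile in the repository, and the actual pipeline may differ. The jar path is a placeholder I made up, since the real file name depends on the project's name and version, and the `DRY_RUN` switch and `run` helper are my own additions so the script prints the commands by default instead of executing them.

```shell
#!/bin/sh
# Hand-run equivalent of the three pipeline stages (compile, image, deploy).
# Defaults to printing the commands; set DRY_RUN=0 to execute them.
DRY_RUN="${DRY_RUN:-1}"
APP="scala-example"
# Placeholder path: sbt-assembly writes under target/scala-<binary version>/,
# but the jar's file name depends on the project, so adjust this.
JAR="${JAR:-target/scala-2.12/app-assembly.jar}"

# Echo the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

pipeline_steps() {
  # Stage 1: compile and package the fat jar (what the sbt agent is for).
  run sbt clean assembly || return 1
  # Stage 2: feed the jar into the binary S2I build created earlier.
  run oc start-build "$APP" --from-file="$JAR" --follow || return 1
  # Stage 3: roll out the new image, since the triggers were set to manual.
  run oc rollout latest "dc/$APP" || return 1
}

pipeline_steps
```

Run from the root of the checked-out application while logged in to the scala-example project, `DRY_RUN=0` would execute the steps directly. The point of the pipeline is that Jenkins performs the first step inside the sbt agent pod, so nothing needs to be installed locally.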

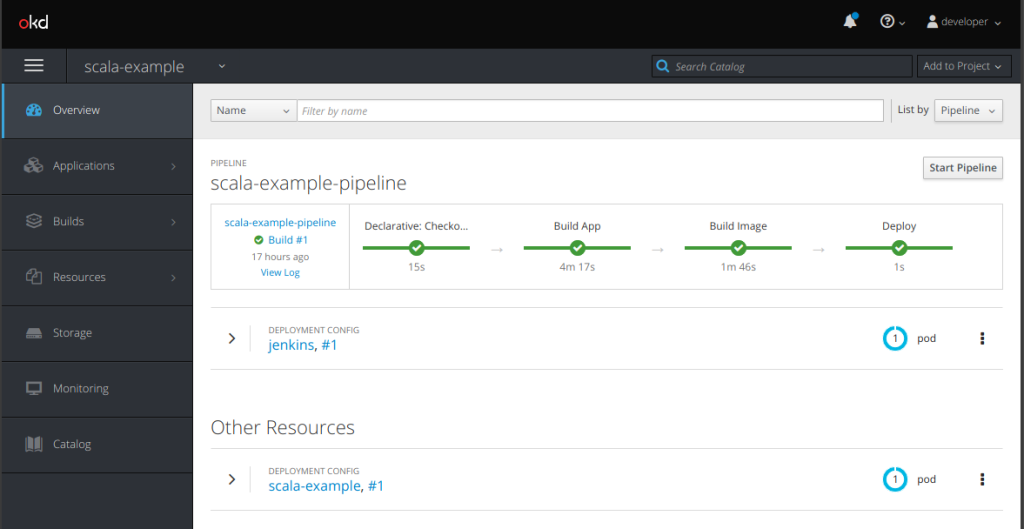

At this point you can build the application. Go to the Builds > Pipelines menu on the left, select scala-example-pipeline and click the Start Pipeline button.

The pipeline will now start, though it may take a couple of minutes to start and perform all of its tasks, so be patient. Once the pipeline is complete you will see the application running.

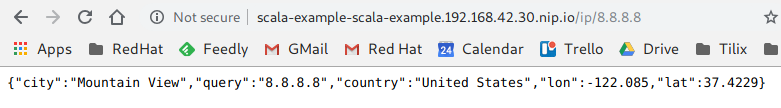

Once the pipeline is complete the application should be running and can be tested. To do so, click on the route. You will see an error because the application doesn’t expose anything at the context root; add /ip/8.8.8.8 to the end of the URL and you should see a response as per below.
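The same check can be made from a terminal. The sketch below looks up the host of the scala-example route and requests the /ip/8.8.8.8 path with curl. The function names are my own, and it assumes you are logged in with `oc`, that the current project is scala-example, and that the route is a plain HTTP route as created by `oc expose svc`.

```shell
#!/bin/sh
# Build the test URL from a route host; /ip/8.8.8.8 is the path used above.
test_url() {
  echo "http://$1/ip/8.8.8.8"
}

# Look up the route host and call the service.
# Assumes an active oc login with scala-example as the current project.
check_service() {
  host="$(oc get route scala-example -o jsonpath='{.spec.host}')" || return 1
  curl -s "$(test_url "$host")"
}
```

Calling `check_service` after the pipeline has finished should print the same response that the browser shows.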