Introduction

I’ve been a heavy user of OpenShift GitOps (aka Argo CD) for quite a while now, as you can probably tell from my numerous blog posts. While I run a single cluster day to day to manage my demos and other work, I often need to spin up other clusters in the public cloud to test or use features specific to a particular cloud provider.

Bootstrapping OpenShift GitOps into these clusters is always a multi-step affair that involves logging into the cluster, deploying the OpenShift GitOps operator and then finally deploying the cluster configuration App of App for this specific cluster. Wouldn’t it be great if there was a tool out there that could make this easier and help me manage multiple clusters as well? Red Hat Advanced Cluster Manager (RHACM) says hold my beer…

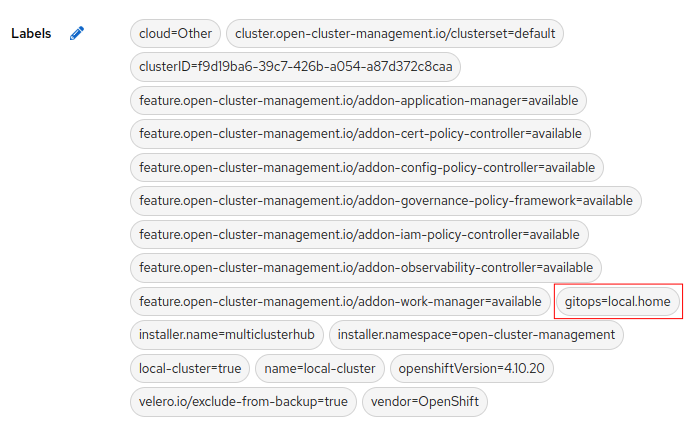

In this article we look at how to use RHACM’s policies to deploy OpenShift GitOps plus the correct cluster configuration across multiple clusters. The relationship between the cluster and the cluster configuration to select will be specified by labeling clusters in RHACM. Cluster labels can be applied whenever you create or import a cluster.
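As a rough sketch of what that labeling looks like on the hub, a ManagedCluster carrying a gitops label might resemble the following. The cluster name here is hypothetical; the label value local.home matches the cluster configuration used later in this article.

```yaml
apiVersion: cluster.open-cluster-management.io/v1
kind: ManagedCluster
metadata:
  name: my-sno-cluster        # hypothetical cluster name
  labels:
    gitops: local.home        # selects the cluster configuration to deploy
spec:
  hubAcceptsClient: true
```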

Why RHACM?

One question that may arise is why use RHACM for this rather than OpenShift GitOps in a hub-and-spoke model (i.e. an OpenShift GitOps instance in a central cluster that pushes other OpenShift GitOps instances to other clusters). RHACM provides a couple of compelling benefits here:

1. RHACM uses a pull model rather than a push model. On managed clusters RHACM deploys an agent that pulls policies and other configuration from the hub cluster. OpenShift GitOps, on the other hand, uses a push model where it needs direct access to the cluster. In environments with stricter network segregation and segmentation, which includes a good percentage of my customers, the push model is problematic and often requires jumping through hoops with network security to get firewalls opened.

2. RHACM supports templating in configuration policies. Similar to Helm lookups (which Argo CD doesn’t support at the moment, grrrr), RHACM provides the capability to look up information from a variety of sources on both the hub and remote clusters. This capability enables us to leverage RHACM’s ability to label clusters to select the specific cluster configuration we want generically.

As a result RHACM makes a compelling case for managing the bootstrap process of OpenShift GitOps.

Bootstrapping OpenShift GitOps

To bootstrap OpenShift GitOps into a managed cluster at a high level we need to create a policy in RHACM that includes ConfigurationPolicy objects to deploy the following:

1. the OpenShift GitOps operator

2. the initial cluster configuration Argo CD Application which in my case is using App of App (or ApplicationSet but more on this below)

A ConfigurationPolicy is simply a way to assert the existence or non-existence of either a complete or partial Kubernetes object on one or more clusters. By including a remediationAction of enforce in the ConfigurationPolicy, RHACM will automatically deploy the specified object if it is missing. This is why I like referring to this capability as “GitOps by Policy”.
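To make that concrete, here is a minimal sketch of an enforcing ConfigurationPolicy that asserts an OLM Subscription for the operator exists. This is an illustration rather than the exact policy used in this article: the policy name is hypothetical and the channel value is an assumption that should be checked against the operator catalog for your OpenShift version.

```yaml
apiVersion: policy.open-cluster-management.io/v1
kind: ConfigurationPolicy
metadata:
  name: example-gitops-subscription     # hypothetical name
spec:
  remediationAction: enforce            # create the object if it is missing
  severity: low
  object-templates:
    - complianceType: musthave          # the object must exist on the cluster
      objectDefinition:
        apiVersion: operators.coreos.com/v1alpha1
        kind: Subscription
        metadata:
          name: openshift-gitops-operator
          namespace: openshift-operators
        spec:
          channel: latest               # assumption, verify for your version
          name: openshift-gitops-operator
          source: redhat-operators
          sourceNamespace: openshift-marketplace
```

With remediationAction set to inform instead, RHACM would only report the cluster as non-compliant rather than creating the Subscription.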

For deploying the OpenShift GitOps operator, RHACM already has an example policy that you can find in the Stolostron GitHub organization in their policy collection repo here.

In my case I’m deploying my own ArgoCD CustomResource to support some specific resource customizations I need; you can find my version of that policy in my repo here. Note that an ACM Policy can contain many different policy types, so in my GitOps policy you will see a few different embedded ConfigurationPolicy objects for deploying and managing different Kubernetes objects.

There’s not much need to review these policies in detail as they simply deploy the OLM Subscription required for the OpenShift GitOps operator. However, the PlacementRule is interesting since, as the name implies, it determines which clusters the policy will be placed against. My PlacementRule is as follows:
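As a rough illustration of that embedding, the skeleton below shows a Policy wrapping two ConfigurationPolicy objects under policy-templates. All names and the namespace are hypothetical, and the embedded object definitions are deliberately partial; the real policy in my repo contains more than this.

```yaml
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-gitops                   # hypothetical name
  namespace: acm-policies               # hypothetical namespace on the hub
spec:
  remediationAction: enforce
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: example-gitops-namespace
        spec:
          remediationAction: enforce
          severity: low
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: v1
                kind: Namespace
                metadata:
                  name: openshift-gitops
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: example-gitops-instance
        spec:
          remediationAction: enforce
          severity: low
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: argoproj.io/v1alpha1
                kind: ArgoCD
                metadata:
                  name: openshift-gitops
                  namespace: openshift-gitops
```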

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: PlacementRule
metadata:
  name: placement-policy-gitops
spec:
  clusterConditions:
    - status: "True"
      type: ManagedClusterConditionAvailable
  clusterSelector:
    matchExpressions:
      - { key: gitops, operator: Exists, values: [] }
```

This placement rule specifies that any cluster that has the label key “gitops” will automatically have the OpenShift GitOps operator deployed on it. In the next policy we will use the value of this “gitops” label to select the cluster configuration to deploy. Before looking at that, however, we need to digress a bit to discuss my repo/folder structure for cluster configuration.

At the moment my cluster configuration is stored in a single cluster-config repository. In this repository there is a /clusters folder where I keep a set of cluster-specific overlays, each named after its cluster.
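A PlacementRule only takes effect on a policy once the two are tied together with a PlacementBinding. A minimal sketch of that binding is shown here; the binding name and the policy name policy-gitops are hypothetical, and all three objects are assumed to live in the same namespace on the hub.

```yaml
apiVersion: policy.open-cluster-management.io/v1
kind: PlacementBinding
metadata:
  name: binding-policy-gitops           # hypothetical name
placementRef:
  name: placement-policy-gitops         # the PlacementRule shown above
  kind: PlacementRule
  apiGroup: apps.open-cluster-management.io
subjects:
  - name: policy-gitops                 # hypothetical Policy name
    kind: Policy
    apiGroup: policy.open-cluster-management.io
```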

Within each of those folders is a Helm chart deployed as the bootstrap application that generates a set of applications following Argo’s App of App pattern. This bootstrap application is always stored under each cluster in a specific and identical folder, argocd/bootstrap.

I love kustomize, so I am using it to generate output from the Helm chart and then apply any post-patches that are needed. Here is an example from my local.home cluster:

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization

helmCharts:
  - name: argocd-app-of-app
    version: 0.2.0
    repo: https://gnunn-gitops.github.io/helm-charts
    valuesFile: values.yaml
    namespace: openshift-gitops
    releaseName: argocd-app-of-app-0.2.0

resources:
  - ../../../default/argocd/bootstrap

patches:
  - target:
      kind: Application
      name: compliance-operator
    patch: |-
      - op: replace
        path: /spec/source/path
        value: 'components/apps/compliance-operator/overlays/scheduled-master'
      - op: replace
        path: /spec/source/repoURL
        value: 'https://github.com/gnunn-gitops/cluster-config'
```

Post-patches may be needed because I have a cluster called default that holds the common configuration for all clusters (Hmmm, maybe I should rename this to common?). You can see this default configuration referenced under resources in the above example.

Therefore a cluster inheriting from default may need to patch something to support a specific configuration. Patches typically modify the repoURL or the path of the Argo CD Application to point to a cluster-specific version of the application, usually under the /clusters/<cluster-name>/apps folder. In the example above I am patching the compliance operator to use a configuration that only includes master nodes since my local cluster is a Single-Node OpenShift (SNO) cluster.

You may be wondering why I’m using a Helm chart instead of an ApplicationSet here. While I am very bullish on the future of ApplicationSets, at the moment they lack three key features I want for this use case:

* No support for sync waves to deploy applications in order, i.e. deploy Sealed-Secrets or cert-manager before other apps that leverage them;

* Insufficient flexibility in templating in terms of being able to dynamically include or exclude chunks of yaml; and

* No integration with the Argo CD UI like you get with App of Apps (primitive though it may be)

For these reasons I’m using a Helm chart to template my cluster configuration instead of ApplicationSets. Once these limitations have been addressed I will switch to them in a heartbeat.
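To illustrate the sync wave point, here is a sketch of the kind of child Application an App of App chart can emit, using Argo CD's sync-wave annotation so that it is applied before applications in later waves. The application name, wave number and source path are hypothetical and not taken from my chart.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: sealed-secrets                            # hypothetical child application
  namespace: openshift-gitops
  annotations:
    argocd.argoproj.io/sync-wave: "1"             # lower waves are synced first
spec:
  destination:
    namespace: sealed-secrets
    server: https://kubernetes.default.svc
  project: default
  source:
    path: components/apps/sealed-secrets/base     # hypothetical path
    repoURL: https://github.com/gnunn-gitops/cluster-config.git
    targetRevision: main
```

An application that depends on it would carry a higher wave number, for example "2".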

So with that digression out of the way, let’s look at the specific ConfigurationPolicy in my GitOps Policy that deploys the cluster configuration:

```yaml
apiVersion: policy.open-cluster-management.io/v1
kind: ConfigurationPolicy
metadata:
  name: policy-managed-openshift-gitops-bootstrap-app
spec:
  remediationAction: enforce
  severity: low
  namespaceSelector:
    exclude:
      - kube-*
    include:
      - default
  object-templates:
    - complianceType: musthave
      objectDefinition:
        apiVersion: argoproj.io/v1alpha1
        kind: Application
        metadata:
          name: cluster-config-bootstrap
          namespace: openshift-gitops
          labels:
            gitops.ownedBy: cluster-config
        spec:
          destination:
            namespace: openshift-gitops
            server: https://kubernetes.default.svc
          project: default
          source:
            # path: clusters/{{ fromSecret "open-cluster-management-agent" "hub-kubeconfig-secret" "cluster-name" | base64dec }}/argocd/bootstrap
            path: clusters/{{ fromClusterClaim "gitops" }}/argocd/bootstrap
            repoURL: https://github.com/gnunn-gitops/cluster-config.git
            targetRevision: main
          syncPolicy:
            automated:
              prune: false
              selfHeal: true
```

Things are pretty straightforward here, but note that the path used in the Argo CD Application being deployed has been templated:

clusters/{{ fromClusterClaim "gitops" }}/argocd/bootstrap

In this case fromClusterClaim is retrieving the value of the gitops label. As a result, for my local.home cluster this path becomes clusters/local.home/argocd/bootstrap once the template substitution is done by RHACM, at which point OpenShift GitOps merrily starts configuring the cluster based on the provided path. If the gitops label was set to rhpds, the path becomes clusters/rhpds/argocd/bootstrap. This technique can be used whether your clusters are in one repo or each cluster has its own repo, but you do need to be consistent.
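For illustration, once the template is resolved on a cluster whose gitops label is local.home, the source stanza of the resulting Application should look like this fragment:

```yaml
# Rendered result on the managed cluster labeled gitops=local.home
source:
  path: clusters/local.home/argocd/bootstrap
  repoURL: https://github.com/gnunn-gitops/cluster-config.git
  targetRevision: main
```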

Similarly if you prefer to drive the configuration off of the cluster name you can use the template function to retrieve the name from hub-kubeconfig-secret that exists on each managed cluster as per the commented line above:

clusters/{{ fromSecret "open-cluster-management-agent" "hub-kubeconfig-secret" "cluster-name" | base64dec }}/argocd/bootstrap

Managing OpenShift GitOps

At the most basic level, having RHACM bootstrap OpenShift GitOps allows us to centrally manage the configuration of the GitOps operator rather than having to go cluster to cluster manually, for example to add a new resource customization. However, RHACM provides a number of additional capabilities out of the box that are very useful when managing OpenShift GitOps across multiple clusters.

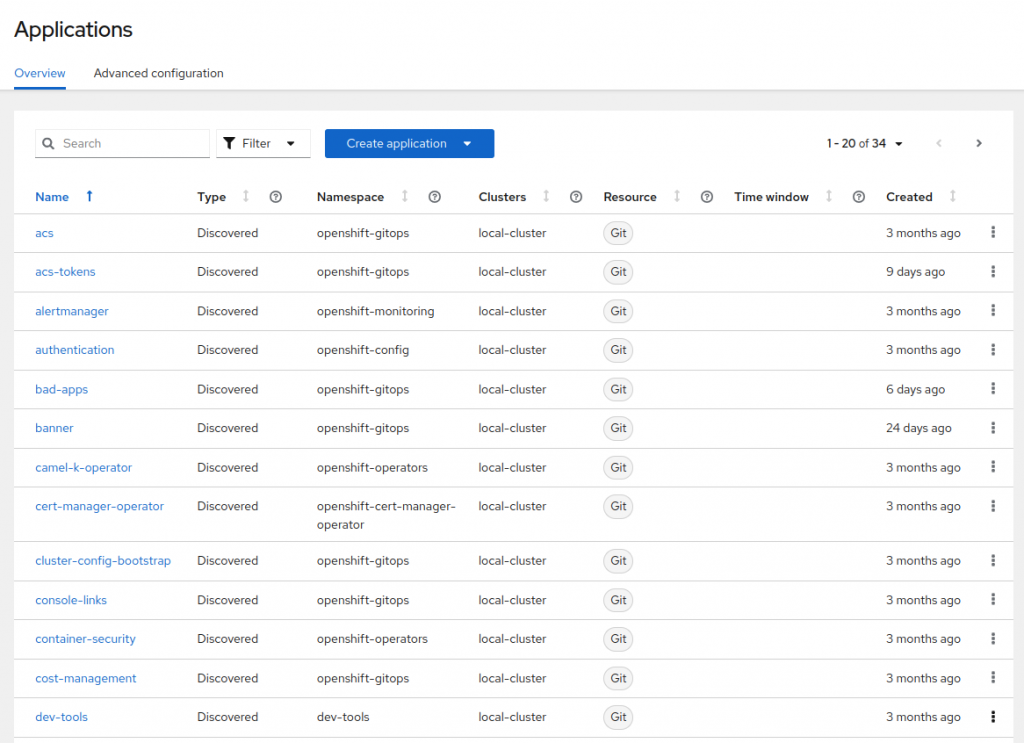

First, RHACM includes Argo CD (which is the upstream of OpenShift GitOps) applications in its Application view. This enables platform operators to quickly and easily view the status of their Application objects (is it out-of-sync, is it degraded, etc.) regardless of the cluster they are deployed on.

You can then drill in to see the status of any application regardless of the cluster it is deployed on:

While this feature is great, wouldn’t it be even more awesome if I could quickly find all of the Argo CD Application objects that were in a bad state (Out-of-Sync, Degraded, etc.)? This is where the Search facility in RHACM comes in, allowing you to find Applications across the different clusters based on the criteria you want, including Application status. Here is an example of finding all Degraded applications:

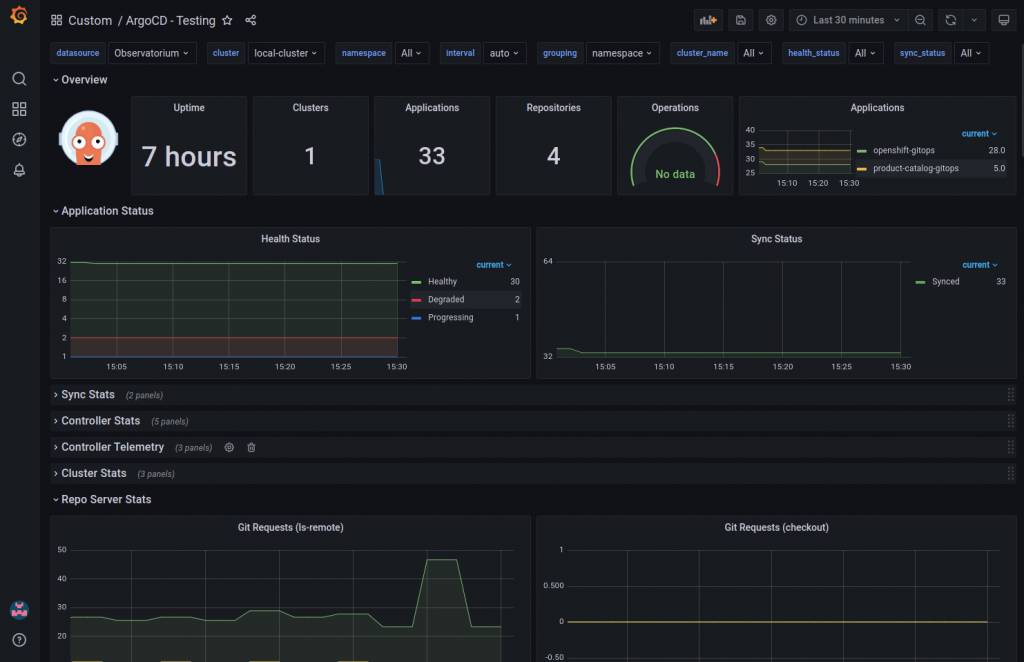

Finally, RHACM includes the ability to design your own Dashboards with its Observability feature. Observability deploys a monitoring stack on the hub cluster that collects and aggregates metrics from all of the remote clusters. Dashboards are designed in Grafana with full support for the standard Prometheus Query Language (PromQL).

There is a nice example of an Argo CD dashboard in GitHub which I deployed in my cluster as an example. Note the two degraded Application objects from my previous Search example:

Conclusion

In this blog we saw how RHACM can be used to effectively manage the bootstrapping of OpenShift GitOps and cluster configuration in a multi-cluster environment. We also reviewed some of the features and capabilities that RHACM brings into play to manage OpenShift GitOps, as well as Community Argo CD, across multiple clusters.