In this blog we will see how to integrate the External Secrets operator with OpenShift monitoring so that alerts are generated when ExternalSecret resources fail to synchronize. This will be a short blog, just the facts, ma’am!

To start, you need to enable the user workload monitoring stack in OpenShift and configure the platform Alertmanager to work with user alerts. In the openshift-monitoring namespace there is a ConfigMap called cluster-monitoring-config. Configure it so that it includes the fields below:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: cluster-monitoring-config
      namespace: openshift-monitoring
    data:
      config.yaml: |
        enableUserWorkload: true
        alertmanagerMain:
          enableUserAlertmanagerConfig: true

Once you have done that, you need to deploy some PodMonitor resources so that the user workload monitoring Prometheus instance in OpenShift will collect the metrics we need. Note that these need to be in the same namespace where the External Secrets operator is installed; in my case that is external-secrets.

    apiVersion: monitoring.coreos.com/v1
    kind: PodMonitor
    metadata:
      name: external-secrets-controller
      namespace: external-secrets
      labels:
        app.kubernetes.io/name: external-secrets-cert-controller
    spec:
      selector:
        matchLabels:
          app.kubernetes.io/name: external-secrets-cert-controller
      podMetricsEndpoints:
      - port: metrics
    ---
    apiVersion: monitoring.coreos.com/v1
    kind: PodMonitor
    metadata:
      name: external-secrets
      namespace: external-secrets
      labels:
        app.kubernetes.io/name: external-secrets
    spec:
      selector:
        matchLabels:
          app.kubernetes.io/name: external-secrets
      podMetricsEndpoints:
      - port: metrics
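A PodMonitor only scrapes pods whose labels match its selector and that expose a container port with the name given in podMetricsEndpoints. As a rough illustration, the relevant fragment of a matching pod might look like the sketch below. The container name and port number here are assumptions (8080 is what I would expect from a default install, but check your own pods), so treat this as a shape to compare against rather than something to apply.

```yaml
# Illustrative fragment of a pod the "external-secrets" PodMonitor would select.
# Container name and containerPort are assumptions; verify against your cluster.
metadata:
  namespace: external-secrets
  labels:
    app.kubernetes.io/name: external-secrets   # must match spec.selector.matchLabels
spec:
  containers:
  - name: external-secrets
    ports:
    - name: metrics          # must match podMetricsEndpoints[].port
      containerPort: 8080    # assumed default metrics port
      protocol: TCP
```

If the port on your pods is unnamed or named differently, the PodMonitor will match the pod but nothing will be scraped.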

Finally, we add a PrometheusRule defining our alert. I am using a severity of warning, but feel free to adjust it to whatever makes sense for your use case.

    apiVersion: monitoring.coreos.com/v1
    kind: PrometheusRule
    metadata:
      name: external-secrets
      namespace: external-secrets
    spec:
      groups:
      - name: ExternalSecrets
        rules:
        - alert: ExternalSecretSyncError
          annotations:
            description: |-
              The external secret {{ $labels.exported_namespace }}/{{ $labels.name }} failed to sync.
              Use this command to check the status:
              oc get externalsecret {{ $labels.name }} -n {{ $labels.exported_namespace }}
            summary: External secret failed to sync
          labels:
            severity: warning
          expr: externalsecret_status_condition{status="False"} == 1
          for: 5m
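If you do not happen to have a failing ExternalSecret handy, one way to exercise the rule is to create one that cannot possibly sync. The sketch below is a hypothetical example, not part of my setup: the resource name, the eso-alert-test namespace, the remote key and the missing SecretStore name are all made up for illustration, and the apiVersion is an assumption that depends on your operator version (newer releases may serve external-secrets.io/v1 instead).

```yaml
# Hypothetical test resource: references a SecretStore that does not exist,
# so the operator should be unable to sync it and should mark it not Ready.
apiVersion: external-secrets.io/v1beta1   # assumption: adjust to the version your operator serves
kind: ExternalSecret
metadata:
  name: broken-example            # made-up name
  namespace: eso-alert-test       # made-up namespace; create it first
spec:
  refreshInterval: 1h
  secretStoreRef:
    kind: SecretStore
    name: does-not-exist          # intentionally missing
  target:
    name: broken-example
  data:
  - secretKey: password
    remoteRef:
      key: some/path              # placeholder remote key
```

Because the rule uses for: 5m, expect to wait about five minutes after the resource goes into a failed state before the alert fires. Delete the test resource afterwards so the alert clears.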

If you have done this correctly and have an ExternalSecret in a bad state, you should see the alert appear. Note that the platform filter is enabled by default in the console, so you will need to turn that filter off to see user alerts.

And then you should see the alert appear as follows:

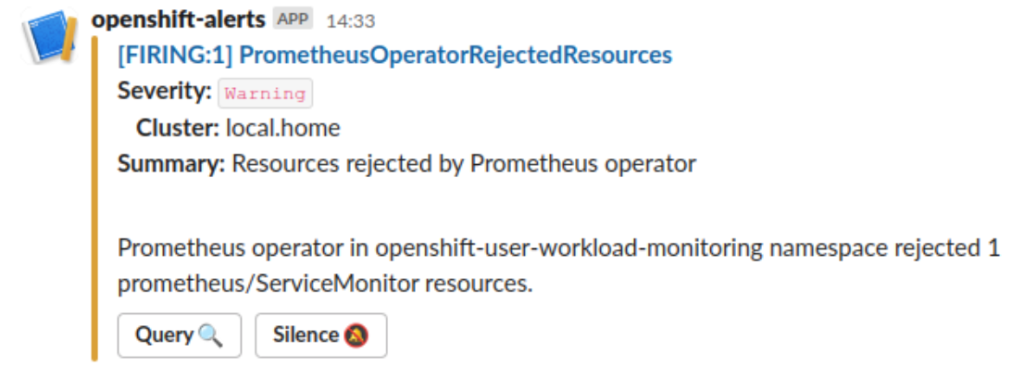

This alert will be routed just like all the other alerts, so if you have destinations configured for email, Slack, etc., the alert will appear in those as well. Here is the alert in my personal Slack instance I use for monitoring my homelab:

That’s how easy it is to get going, easy-peasy!